You are living in a computer simulation.

Nick Bostrom wrote about this in 2001, shortly after The Matrix came out. I highly recommend reading his paper, Are You Living in a Computer Simulation?, which puts forth a great logical analysis of why we might well be living in a computer simulation. It has since become known in philosophy (and Internet culture) as The Simulation Argument.

In summary, Bostrom’s argument is as follows: a technologically advanced civilization could generate realistic simulations of its ancestors. This seems plausible, because we are already capable of generating highly realistic simulations using today’s technology. If such civilizations exist and choose to run these types of simulations, then simulated consciousnesses would vastly outnumber real ones, making it statistically very likely that we ourselves are simulated beings.
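Bostrom’s paper makes the statistical step explicit with a short formula. If f_P is the fraction of human-level civilizations that reach a “posthuman” stage and N̄ is the average number of ancestor simulations such a civilization runs, the fraction of observers with human-type experiences who are simulated is f_sim = f_P·N̄ / (f_P·N̄ + 1). Below is a small Python sketch of that ratio; the inputs in the usage lines are arbitrary illustrations, not estimates of anything.

```python
def fraction_simulated(f_p: float, n_sims: float) -> float:
    """Fraction of human-type observers who live in simulations.

    f_p    -- fraction of human-level civilizations that reach a posthuman stage
    n_sims -- average number of ancestor simulations run by such a civilization

    The average population per civilization cancels out of the ratio,
    so it does not appear as a parameter.
    """
    if not 0.0 <= f_p <= 1.0:
        raise ValueError("f_p must be a fraction between 0 and 1")
    if n_sims < 0:
        raise ValueError("n_sims cannot be negative")
    simulated = f_p * n_sims  # simulated histories per real history
    return simulated / (simulated + 1.0)


# Illustrative inputs only: 1% of civilizations survive, each runs 1,000 simulations.
print(round(fraction_simulated(0.01, 1000), 3))  # 0.909
# If no civilization ever runs simulations, nobody is simulated.
print(fraction_simulated(0.01, 0))  # 0.0
```

With these made-up inputs about 91% of observers would be simulated; the fraction only stays small if f_P·N̄ is much smaller than one.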

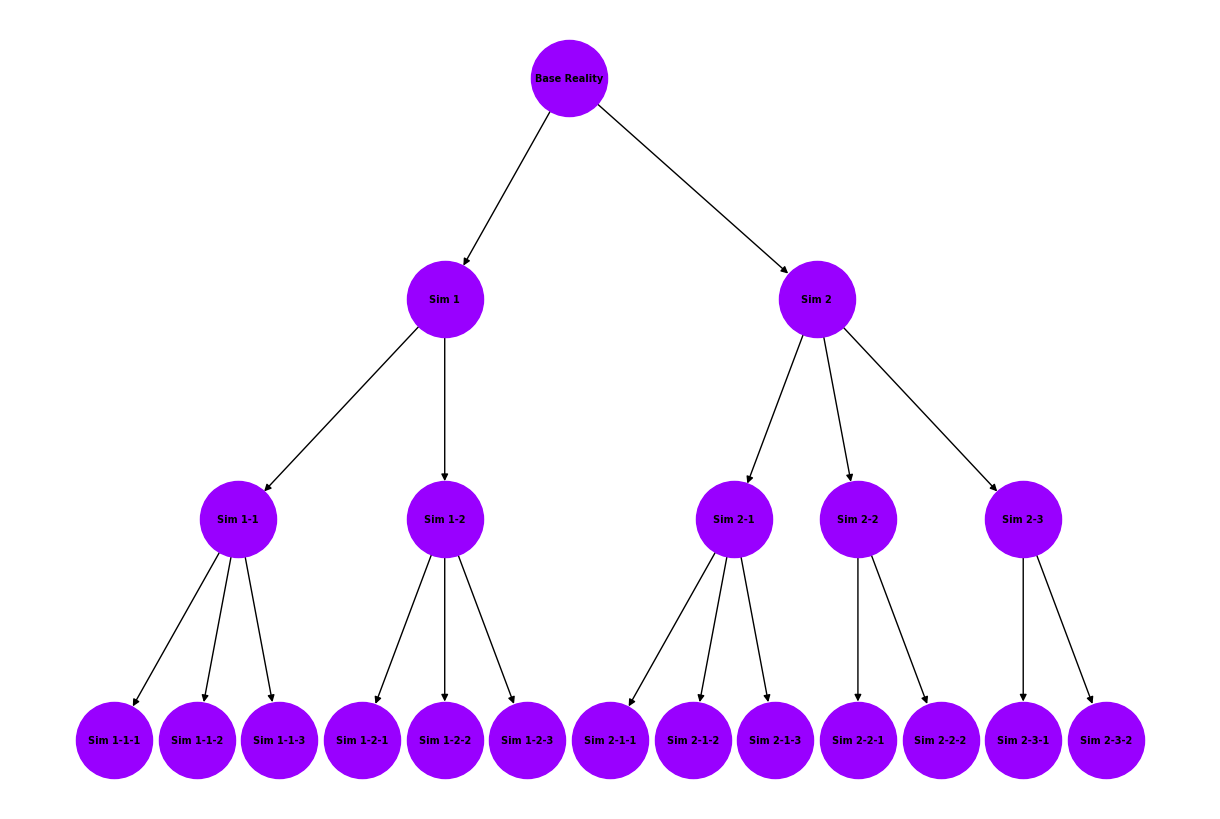

Bostrom’s approach was purely mathematical and statistical. He supposes that if this is true, there are multiple realities at multiple levels. At the root of the tree of realities is some kind of “base reality,” and below it are multiple levels of simulated realities, potentially growing exponentially as each simulation creates multiple simulations of its own.
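To make “growing exponentially” concrete, here is a toy count under a simplifying assumption that is ours, not Bostrom’s: every reality runs the same number of child simulations (the branching factor) down to a fixed depth. Level k of the tree then holds branching**k realities, while only the single root is base reality.

```python
def count_realities(branching: int, depth: int) -> tuple[int, int]:
    """Return (base_realities, simulated_realities) for a uniform tree.

    Toy assumption: every reality above the deepest level runs exactly
    `branching` child simulations; level 0 is the single base reality.
    """
    if branching < 0 or depth < 0:
        raise ValueError("branching and depth must be non-negative")
    simulated = sum(branching ** level for level in range(1, depth + 1))
    return 1, simulated


base, simulated = count_realities(branching=10, depth=3)
print(simulated)                       # 1110  (10 + 100 + 1000)
print(simulated / (base + simulated))  # fraction of all realities that are simulated
```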

This argument does not, however, attempt to prove that we are in a simulation, or, more importantly, explain why these simulations are created. The Matrix hypothesizes that our simulation was created as a sort of paradise by artificial superintelligence. The movie’s reason for the simulation’s existence (humans are enslaved by artificial superintelligence to be used as a power source) was never really satisfying for me personally. Humans don’t generate that much energy. In fact, the human brain apparently only requires about twenty watts of power to operate, which is far less than a typical incandescent light bulb. Even the entire population of the Earth is unlikely to satisfy the unbounded energy needs of an artificial superintelligence.
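As a rough sanity check on that last claim, the twenty-watt figure can be multiplied out for everyone alive. The population of roughly eight billion is our assumption, and the calculation counts only brains, not whole bodies:

```python
BRAIN_POWER_W = 20                 # approximate power draw of one human brain, in watts
WORLD_POPULATION = 8_000_000_000   # assumption: roughly eight billion people

total_w = BRAIN_POWER_W * WORLD_POPULATION  # every brain on Earth combined
print(f"{total_w:.2e} W")          # 1.60e+11 W
print(f"{total_w / 1e9:.0f} GW")   # 160 GW
```

That is roughly the output of 160 large power plants of about one gigawatt each.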

We’re discovering how neural networks work

Neural networks for machine learning date back decades; researchers at Stanford University were already building practical ones in 1959 [1]. What I find really interesting about neural networks is that they seem to be discoveries, not inventions: we designed them to behave as they do today based on prior research about how our brains process information [2].

Intuitively, people understand how their brains are able to remember things and acquire new skills. It’s through the process of “training”: repeatedly exposing our senses to patterns of stimuli, or mimicking a certain physical action. We know that for most things, we can’t just observe or attempt something once and then execute it perfectly every time after that. Driving an automobile, for example, requires learning how to operate the machine, how to read and interpret street signs, how to remember the rules of the road, etc. Rather terrifyingly, we are continuously learning how to operate automobiles by training our brains on real-world data, that is, by actually driving around in the world.

The study of artificial intelligence took a recognizable path. For robotics, specifically self-driving cars [3], there were two approaches. The first was rule-based programming, in which human engineers wrote procedural code describing how to drive an automobile. All of the edge cases had to be anticipated and covered. If a deficiency was discovered (usually in real-world testing), an engineer had to address it directly by writing a new rule (or tweaking an existing one).
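For contrast with the learned approach, here is roughly what a rule-based controller looks like. This is a hypothetical toy: the `Observation` fields, the actions, and the ten-meter threshold are invented for illustration, and a real system would need thousands of such rules. Every new edge case means an engineer editing `decide` by hand.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    # Hypothetical, heavily simplified summary of what the sensors report.
    light: str                 # "green", "yellow", or "red"
    pedestrian_ahead: bool
    distance_to_stop_m: float  # meters to the stop line


def decide(obs: Observation) -> str:
    """Hand-written driving policy: every behavior is an explicit rule."""
    if obs.pedestrian_ahead:
        return "brake"
    if obs.light == "red":
        return "brake"
    if obs.light == "yellow":
        # Rule for an edge case: too close to stop comfortably, so continue.
        return "proceed" if obs.distance_to_stop_m < 10.0 else "brake"
    return "proceed"


print(decide(Observation("green", False, 50.0)))   # proceed
print(decide(Observation("yellow", False, 5.0)))   # proceed
print(decide(Observation("yellow", False, 30.0)))  # brake
```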

The second approach uses what we discovered about biological neural networks to build an artificial brain that drives a car. In the field, this is known as deep learning. Artificial neural networks attempt to mimic human behavior by “learning” from data acquired from the real world. By defining a quantified measure of error (a “loss function”) and repeatedly adjusting the weights of the connections between neurons to reduce it, the network becomes better at predicting outputs from given inputs.
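The training loop described above can be sketched in a few lines of Python. This is a minimal illustration of gradient descent, not how production networks are built: a single artificial neuron (equivalent to logistic regression) learns the logical OR function. The choice of OR, the binary cross-entropy loss, and the learning rate of 0.5 are our assumptions for the example. Each step nudges the connection weights and the bias in the direction that lowers the loss; real frameworks compute these gradients automatically across millions or billions of weights.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, epochs=2000, lr=0.5):
    """Fit one neuron with gradient descent; returns (weights, bias, losses)."""
    w = [0.0, 0.0]  # connection weights, one per input
    b = 0.0         # bias term
    losses = []
    eps = 1e-12     # keeps log() away from zero
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        loss = 0.0
        for (x1, x2), target in data:
            pred = sigmoid(w[0] * x1 + w[1] * x2 + b)
            p = min(max(pred, eps), 1 - eps)
            # Binary cross-entropy: how wrong the prediction was.
            loss -= target * math.log(p) + (1 - target) * math.log(1 - p)
            # For sigmoid + cross-entropy, dLoss/dz simplifies to (pred - target).
            err = pred - target
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        n = len(data)
        # Step against the average gradient to reduce the loss.
        w = [w[i] - lr * grad_w[i] / n for i in range(2)]
        b -= lr * grad_b / n
        losses.append(loss / n)
    return w, b, losses


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b, losses = train_neuron(OR_DATA)


def predict(x1: int, x2: int) -> int:
    return round(sigmoid(w[0] * x1 + w[1] * x2 + b))
```

Because every prediction starts at 0.5, `losses[0]` is ln 2 (about 0.693); by the last epoch the loss is far lower and `predict` reproduces the OR truth table.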

Amazingly, this actually works. It even works for inputs the network has never seen before. The problem is that this process requires an obscene amount of training data, significantly more than a human being would need to learn the same task. Because training requires so much data to yield reasonable results, some engineers are training neural networks on simulated data in addition to real-world data. For example, self-driving cars can be trained on simulated recreations of real roads, using images generated by a game engine. The game engine tries to match the characteristics of the real world as closely as possible, from dirt accumulating on cameras to lens flares and poor road conditions.
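One common way to make simulated data useful is domain randomization: instead of rendering one idealized world, the simulator randomly varies nuisance factors such as lens dirt, flares, and road conditions from sample to sample, so the network cannot latch onto any single rendering quirk. The sketch below covers only the parameter-sampling half and assumes a hypothetical renderer that would turn each `SceneSettings` into an image; the field names and ranges are invented for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneSettings:
    # Hypothetical knobs a game-engine renderer might expose.
    lens_dirt: float          # 0.0 = clean lens, 1.0 = heavily smudged
    lens_flare: bool
    road_wetness: float       # 0.0 = dry, 1.0 = standing water
    sun_elevation_deg: float


def sample_scene(rng: random.Random) -> SceneSettings:
    """Draw one randomized scene configuration."""
    return SceneSettings(
        lens_dirt=rng.uniform(0.0, 0.6),
        lens_flare=rng.random() < 0.2,  # flare in roughly 20% of frames
        road_wetness=rng.uniform(0.0, 1.0),
        sun_elevation_deg=rng.uniform(-5.0, 85.0),
    )


def build_dataset(real_frames: list, n_synthetic: int, seed: int = 0) -> list:
    """Mix real frames with randomized synthetic scene specs, tagged by source."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    synthetic = [("synthetic", sample_scene(rng)) for _ in range(n_synthetic)]
    return [("real", frame) for frame in real_frames] + synthetic


dataset = build_dataset(real_frames=["frame_001", "frame_002"], n_synthetic=5)
```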

Something really cool about the internet age is that we humans can now learn how to do things just by watching videos on YouTube. Before YouTube, if you wanted to learn how to cook pizza at home, for example, you had to learn it by watching another person. You could read instructions or tips in a book, but a physical activity like cooking really requires using our brains’ mirror neurons to mimic the actions of another human being. Knowledge domains that involve tacit knowledge often rely on apprenticeship for this reason [4].

When we learn how to do something by watching YouTube videos, we are, in a sense, training ourselves on simulated data. This is, of course, not a perfect substitute for actually cooking pizza in our own kitchens (or via an apprenticeship), but it is better than nothing. In fact, people who are passionate about their fields often use both real-world data (performing a job, apprenticeship) and simulated data (videos).

As a result of this discovery, there’s a huge push to generate more training data, and not just for self-driving cars. Simulations are being built for manufacturing, retail, and telecommunications applications. Nvidia is even working on what they call digital twins, and is openly building a computer simulation of Earth for the express purpose of training AI models.

We are being used to generate training data

If neural networks are truly discoveries rather than inventions, we appear to be embarking on the same path as our parent reality. They most likely tuned the parameters of this reality to be very similar to their own, and created us in their own image.

The purpose is probably that they need training data. We can already suppose that the parent reality has vast computational resources at its disposal. From Bostrom’s paper:

Many works of science fiction as well as some forecasts by serious technologists and futurologists predict that enormous amounts of computing power will be available in the future. Let us suppose for a moment that these predictions are correct. One thing that later generations might do with their super-powerful computers is run detailed simulations of their forebears or of people like their forebears. Because their computers would be so powerful, they could run a great many such simulations.

We might therefore assume that one of the reasons they have so much computing power is that they built it to train neural networks. With this surplus of computation, they chose to run our simulation and gather more simulated training data for tasks they may wish to automate in their reality.

What kinds of tasks do they want to automate? We might be able to infer this by examining what Ted Kaczynski [5] calls the “industrial-technological system,” also known as The System. Anti-capitalists might describe The System as one of “capitalist exploitation,” whereas libertarians might argue that it is an Orwellian monopoly constraining individual freedom and autonomy. What all of these definitions have in common is that there appears to be some unstoppable, almost superhuman force compelling all humans on Earth to do something.

Kaczynski attributes the emergence of The System to an evolutionary process [6], an inevitable outcome of the agricultural revolution. He contends that The System often perpetuates itself at the expense of human well-being and environmental health. Given the seemingly infinite paths human society could have taken, this raises the question: where does The System, with all its observed phenomena, truly originate? It’s hard to even imagine what our globalized civilization would be like without its looming presence.

To answer this, it might be helpful to examine what kinds of activities The System seems to require us to do.

- Working in factories. This is tedious, repetitive work that people nonetheless do because it is productive, and people are rewarded with money in exchange for their time.

- A lot of driving. Whether this is a knowledge worker’s daily commute, or a trucker paid to ship product, people are rewarded for doing this, even though most people deem it to be rather boring and repetitive.

- Locomotion. In addition to trucking, an active daily life seems to require a lot of walking and moving around. Americans apparently move residences more than eleven times in their lifetimes [7]. Often the impulse for moving is financial opportunity.

- Manipulating machines. Whether this is farming, manufacturing, or even retail, people are rewarded for learning how to efficiently operate machines of various kinds.

More recently:

- Writing software. This is now one of the best-paying occupations, which suggests an advanced phase of the training process wherein we are tasked with automating information processing itself. Now that software developers are working on generative programs (i.e., programs like ChatGPT that can generate literature or even other programs), we may see some kind of exponential growth in content generation, especially if that was the original goal of our simulation.

- Unraveling entropy. Similar to software developers, lawyers are paid to create, then untangle, complex systems. Human beings seem to be uniquely capable of creating order out of chaos, suggesting that one of the purposes of this simulation is to generate negentropy. Experts in the field of unraveling entropy are also rewarded substantially.

In the context of deep learning, a lot of people have noticed that our automation efforts appear to be starting from an unexpected direction. For decades, people assumed that the first jobs to be automated (as prophesied by The Jetsons) would be hard labor or “blue collar” jobs. Instead, the first jobs to be automated appear to be highly cognitive (“white collar”) work like art or software development.

This may be evidence that we are not the first reality to discover neural networks and the ability to train machines to automate things. If a parent reality really exists, then it may have used our simulation to automate conscious activity in a much more logical order. This also provides some insight into what we have in common with the parent reality, and what its goals might be.

One might think of a simulation as passing through the following training phases:

- Hard/physical labor (subsistence farming)

- Factory work (operating machines)

- Advanced locomotion (driving, transport)

- Unraveling entropy (software development, law)

Something highly unfalsifiable but interesting to consider is that the resolution of our simulation may not have been constant throughout human history, which might explain why the training phases enumerated above seem to happen discretely. Science fiction likes to entertain the idea that ancient civilizations (such as those depicted in the Star Wars franchise) could have developed highly advanced technology if they had simply possessed the knowledge of science and physics we have today. However, it might be possible that our simulation was nowhere near the resolution necessary for simulating advanced technology during the first few training phases. Technological leaps like the discovery of electron holes, which enabled the invention of the transistor, may not have been possible because our simulation was not yet operating at the quantum scale. This could also explain why certain leaps in scientific research, like the discovery of nuclear energy, the transistor, and even calculus, seem to happen almost simultaneously in very disparate parts of an unconnected world.

When the time comes for us to actually create our own simulations (as Nvidia has already begun to do with its digital twin project), the progression of these phases will make much more sense and will likely follow the order above.

One might wonder: if our only purpose is to train neural networks for automating tasks in the parent reality, why do we have a creative urge? Why do humans feel compelled to invent things and create art? This may also be explained by Nvidia’s free eBook on digital twins:

Gaps in software engineering and 3D design experience are often cited as potential roadblocks to developing a digital twin. However, don’t let this deter you from starting your journey. At NVIDIA, we’re working with a growing number of systems integrators and development partners who are ready to support your organization with these critical skills and experience. Alongside our partners, our teams are also working hard to develop simulation-ready assets and extensions that will make it even easier for your teams to start building digital twins.

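In other words, simulated assets are difficult and time-consuming to make, so it makes sense to automate their creation as well. Our creative urge may be exactly that: the parent reality automating the production of assets for its own simulations.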

The entropy problem

The concept of entropy comes from the Second Law of Thermodynamics. In summary, entropy is a measure of disorder (or “randomness”) in a physical system. It helps resolve a puzzle left by the conservation of energy (the First Law), which says that energy can’t be created or destroyed: if energy is never lost, why does it become less useful? For example, the reason you can’t use the heat radiated from your computer to power your computer again is not just energy loss during transfer; that heat is less useful than the electrical energy fed in through the power supply, because it is far less concentrated.
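The “less useful” part can be made quantitative. For any heat engine running between a hot reservoir at absolute temperature T_hot and a cold one at T_cold, the Second Law caps the fraction of heat that can be turned into work at the Carnot efficiency, 1 − T_cold/T_hot. The temperatures below are illustrative assumptions, not measurements: warm exhaust air from a computer at about 320 K released into a 295 K room, versus combustion gases at around 1500 K.

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Upper bound on work extracted per unit of heat (temperatures in kelvin)."""
    if t_cold_k <= 0 or t_hot_k <= t_cold_k:
        raise ValueError("need 0 < t_cold_k < t_hot_k")
    return 1.0 - t_cold_k / t_hot_k


ROOM_K = 295.0  # assumed room temperature, about 22 degrees C

print(f"{carnot_efficiency(320.0, ROOM_K):.1%}")   # 7.8%  (warm computer exhaust)
print(f"{carnot_efficiency(1500.0, ROOM_K):.1%}")  # 80.3% (hot combustion gases)
```

Even a perfect engine could recover less than a tenth of the computer’s waste heat as work, which is what “less concentrated” means in practice.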

The unfortunate thing about entropy is that, in an isolated system, it never decreases. This is easy to intuit by thinking about processes that convert useful energy into mechanical work (like machines), as well as exothermic chemical reactions. In both scenarios, the useful energy is eventually depleted. There is no known way to reverse this.
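The statistical reason entropy tends to increase shows up in a toy model, the Ehrenfest urn model (a standard textbook example, not part of the simulation argument). N gas particles start all in the left half of a box; at each step one randomly chosen particle switches halves. Using Boltzmann’s definition S = k·ln W with k set to 1, where W = C(N, n) counts the arrangements with n particles on the left, entropy climbs from zero and then fluctuates near its maximum at an even split, simply because disordered arrangements vastly outnumber ordered ones. N, the step count, and the seed are arbitrary choices for the sketch.

```python
import math
import random


def boltzmann_entropy(n_total: int, n_left: int) -> float:
    """S = ln W, with W = number of ways to place n_left of n_total particles on the left."""
    return math.log(math.comb(n_total, n_left))


def ehrenfest(n_total: int = 100, steps: int = 2000, seed: int = 1) -> list[float]:
    rng = random.Random(seed)
    n_left = n_total  # perfectly ordered start: every particle on the left
    history = [boltzmann_entropy(n_total, n_left)]
    for _ in range(steps):
        # Pick a particle uniformly at random: it is on the left with
        # probability n_left / n_total, and it hops to the other side.
        if rng.random() < n_left / n_total:
            n_left -= 1
        else:
            n_left += 1
        history.append(boltzmann_entropy(n_total, n_left))
    return history


entropy = ehrenfest()
max_entropy = boltzmann_entropy(100, 50)  # the most disordered macrostate
```

Nothing forbids the particles from wandering back to the ordered state; for large N it is just overwhelmingly unlikely, which is the statistical content of “entropy always increases.”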

With regard to the simulation argument, we can draw two potential conclusions from the existence of entropy. One is that entropy is an emergent behavior of some kind of computational limit (implying that entropy doesn’t necessarily exist in the parent reality). We know that computing resources are scarce in our world, albeit mostly because of these laws of thermodynamics. It’s possible that computing resources are finite in the parent reality as well, and that these laws of thermodynamics serve as a kind of “governor” for physical computation.

The second, more compelling conclusion is that entropy exists in the parent reality as well, and these laws were “written” by them. In a universe of high entropy, it may be impossible or inefficient to expend resources training neural networks on real-world data. Either there simply isn’t enough useful energy left to devote to this effort, or energy is being rationed in some way. A real-world [8] metaphor for this might be to imagine a scenario where Waymo wants to gather training data for its self-driving cars, but gasoline and electricity are prohibitively expensive due to an extreme scarcity of energy. Waymo’s only option at that point would be to build a simulation.

In addition to simulating a lower-entropy environment for gathering training data, having an artificial intelligence (which might be us) devote significant cognitive resources to finding ways of economizing useful energy and generating negentropy might be a good way to delay the heat death of their universe.

The parent reality

This raises a great question: what is the parent reality like? Most likely, all of its physical constants are the same as ours or very close, otherwise the training data generated by our simulation wouldn’t be useful. For the same reason, we (humans) are most likely created in the image of the parent reality’s intelligent inhabitants. At the very least, they most likely have humanoid characteristics: something resembling two arms, two legs, binocular vision, etc. Other strange, unexplained phenomena, like why we sleep, what our motivations are, and how we evolved, might be mysterious because they serve some kind of purpose in our simulation but aren’t present in the parent reality.

In fact, we take for granted that many other mysteries exist in our reality, such as the concept of infinity, the nature of our existence (why are we here?), and the origin of the universe (“Big Bang Theory”). These mysteries may simply not exist in the parent reality, as there is no reason for them to be obscured there. Alternatively, the mysteries of our universe may be the confusing result of features that the parent reality decided not to simulate for some reason.

We must also assume that if The System was somehow designed by the parent reality as the basis for our motivation to generate training data, then this “System” doesn’t exist in the parent reality. If so, this has profound implications for how they might organize their society (if there even is a society) and what their motivations in life are.

We should reverse engineer our file format

Since the simulation argument is unfalsifiable, the entire study of this is, of course, exclusively in the realm of belief. However, by living one’s life with the assumption that we’re in a computer simulation, and thinking really hard about why such a simulation exists, we could create a useful secular (?) narrative for the 21st century. Just as the nuclear-powered space age served as an incredibly motivating narrative for the 20th century, operating under the assumption that the rules of our universe are no different from those of a video game could lead to a real explosion of creativity and technological development.

For this reason, the increasingly popular study of speedrunning, while currently seeming to be just a fascinating hobby, may actually be an extremely productive pursuit. One of the most interesting examples of this is this video of someone playing Super Mario World.

By exclusively manipulating objects in Mario’s reality and obeying all of the laws of physics in his simulation, the speedrunner in this video was able to inject completely arbitrary code. If Mario is able to do this in his simulation, why couldn’t we do this in ours?

There’s a saying that physics is the law and everything else is just a recommendation. Perhaps the only thing that is law is The System, and everything else (including physics) is a recommendation. As long as we continue to provide useful training data to the parent reality, we might be free to subvert nature in any way we please.

If you found this entertaining, be sure to check out George Hotz’s humorous talk about Jailbreaking the Simulation. Science fiction author Philip K. Dick had a really fascinating schizophrenic episode that he discussed in great detail during a talk in France in 1977, in which he described many ideas that later appeared in The Matrix movie series. Obviously, you should watch The Matrix if you haven’t already, but another great movie on this topic is The Thirteenth Floor, released the same year. This fascinating interview with a UFO guy covers echoes of simulation hypothesis thinking throughout several ages of history.

[1] Widrow, Bernard, and Marcian E. Hoff. “Adaptive Switching Circuits.” Stanford University, 1960.

[2] There are two exciting ways to interpret this. If you are secular, then it appears the process of evolution really works, and the products of nature are hard to beat with human ingenuity alone. If you are religious, then it appears that God really is the “ultimate engineer,” and the discipline of engineering is really just a process of imitation.

[3] For a good history of self-driving cars (before deep learning), check out Larry Burns’s Autonomy: The Quest to Build the Driverless Car—And How It Will Reshape Our World.

[4] Could streaming help convey tacit knowledge? by Andy Matuschak. Andy writes a lot about learning and education and is a big inspiration of mine.

[5] Kaczynski was also infamously known as “The Unabomber” because of his violent attempt to overthrow this system. I do not condone this!

[7] United States Census Bureau: “Calculating Migration Expectancy Using ACS Data”

[8] Morpheus: “What is real?”